![[jms1]](../images/jms1-150x200.png)

Me, 2023-11-14

jms1.info

This site is a collection of random documents that I've written over the past 20+ years. Many of them are technical, but some will be related to non-technical things that I happen to be interested in. I'm trying to keep them organized by topic, as you can see in the index to the left.

One of the reasons I'm putting them here is so that I know where to find them. I've found that I can't hold every little detail about everything in my brain anymore, so if I write things down, I'll be able to go back and refresh my own memory when I need to.

Another reason is that people have asked me about a lot of these topics, so having them on a web page lets me give a quick answer and include a link where they can read more information, rather than having to spend a bunch of time answering the same questions over and over again.

And if other people find the information on this site useful, then ... I'm glad I could help.

Notes

Some pages may not be complete.

The first part of how I add pages to this site is to just copy an existing Markdown file. A lot of the original files I'm copying here will contain very basic bare-bones information, enough for me to understand (usually), but possibly not useful to others. (Part of why I'm adding them here is to add the more human-friendly explanations, both for others, and for myself a year from now when I've forgotten all this.)

When I do this, my intention is to go back and add more detailed information to those pages, but I don't always remember to do it - especially if I'm in a hurry.

If you find one of these "bare bones" pages and need more information, feel free to let me know.

Some of the links on the left may not exist.

These are pages I'm planning to write, but haven't done so yet.

There's not a whole lot of content here.

This is starting to not be the case anymore, but as I mentioned above, I've been writing down and keeping random notes for over 20 years. I started the current version of this site in 2024-06, it's going to take a while for me to find, organize, prioritize, and write the pages here. Plus I'm doing this in my spare time, I have a full-time job which keeps me pretty busy and, most days, makes me not want to even look at a computer when work is done.

My Other Sites

These are some other public web sites that I've written over the years.

-

jms1.netis my original "home page". It started off containing "everything", but over time I found that certain topics were taking enough space to justify moving them to their own sites. -

qmail.jms1.netis my web site about Qmail. When I started using qmail I took several other patches and combined them into a single patch, and I put the patch on thejms1.netsite. People started using my combined patch and asking questions about it, so I moved all of the qmail-related content to its own web site.I was making my living as an independent contractor at the time, and unfortunatlely, since returning to the world of full-time employment, I haven't had time to maintain the site (or the combined patch). If you're building a mail server I still recommend qmail, but I don't really recommend my own combined patch anymore, because it is so far out of date.

-

kg4zow.usis my site about Amateur Radio (aka "ham radio"). I'm not as active on the air as I used to be, partly because I moved to a smaller city which doesn't have as many ham radio operators around, and partly because I don't have the time. -

remarkable.jms1.infois a site I started for information about the reMarkable tablets. It's a Linux-based e-ink tablet with a textured screen that I find really nice to write on. One of the things I really like about it is that it allows SSH-as-root out of the box. I've been writing my own programs to work with them without using reMarkable's cloud service. -

jekyll.jms1.infowas my first attempt at writing this site. As the name suggests, I was using Jekyll to manage the content, but in 2024-06 I decided to re-do the entire site using mdbook, since I'm a lot more familiar with it (I use it to maintain half a dozen internal web sites for$DAYJOB), and because it's a lot easier to install, configure, and update on a new workstation. Plus, it makes more sense to me to organize the pages by subject rather than by date.

Contact

The best way to reach me is to email jms1@jms1.net. If your message bounces (my server does some pretty aggressive spam-blocking), change net to me - that will also reach me, but it works through Apple's servers. Note that I don't always check personal email every day, so if you email me and I don't respond, please be patient.

If you need an immediate response for some reason, or if you've found a problem with the information on this site, you can use Keybase chat. My username there is jms1.

⚠️ This is not an offer to provide free consulting or support services.

My idea of what constitutes an emergency is probably not the same as yours. For example, if your mail server is down, that almost certainly doesn't affect me at all, unless you're offering to pay me for my time.

About this site

I'm writing this site as a collection of Markdown files, converting them to static HTML files using mdbook with some customizations, tracking the changes using git, and hosting the finished site using Keybase Sites.

Clockwork Pi uConsole

The Clockwork Pi uConsole is a handheld Linux computer, made by Clockwork Pi.

Useful Links

- Clockwork Pi - manufacturer of the uConsole

- HackerGadgets - maker of several popular expansion kits for the uConsole

- Clockwork Pi forums - discusson forum for users of the uConsole and other Clockwork Pi hardware

- uConsole World - blog site with articles and tutorials about the uConsole

Hardware

The uConsole is sold as a kit, which includes ...

- a metal frame, that the parts mount into

- a 5-inch 1280x720 display

- a keyboard with a directional pad and "gaming" buttons (A/B/X/Y/Select/Start)

- a trackball with L/R mouse buttons

- a pair of small internal speakers

- a set of circuit boards to connect the various components together

- metal front and back covers

- an external wifi antenna, meant to be attached to the outside of the case

No soldering is required. All parts are secured to the frame using M4 hex-head screws, and the kit comes with a 2.5mm hex driver (aka "allen wrench"), which is the only tool needed to assemble the unit.

The main board has a single USB-A port (USB 2.0), a USB-C port (for power input only), a Micro-HDMI port, and a 3.5mm headphone jack. There is room inside the unit for an expansion board, which may have its own external connectors on the other side of the unit.

The kit can can be ordered with one of several "core" boards containing an ARM- or RISC-based CPU board, or with no "core" (for people who may already have a Raspberry Pi Compute Module 4/5). I ordered mine with a Raspberry Pi Compute Module 4 core.

It can also be ordered with a 4G Cellular/GPS expansion board, with its own antenna meant to be attached to the outside of the case.

Open Source

The design is all open-source. The uConsole repo on Github contains schematics for the circuit boards, source code for the keyboard firmware, and information about how they built the kernel with the correct modules for the uConsole hardware.

Their web site sorta says that the design files for the frame and front/back covers should be there as well, but I don't see them in this repo (or in any of their repos) as of 2025-12-31.

Batteries

Batteries are NOT included with the kit. This is due to safety regulations around shipping lithium batteries.

The uConsole is powered by a pair of 18650 rechargable Li-ion batteries, with charging circuitry included on the main board. The battery holder within the unit can hold either flat-top or button-top batteries.

⇒ The Batteries page has more details, including notes about which batteries to order and which ones to avoid.

Power Supply

A power supply is NOT included with the kit. This is probably because different parts of the world use different power connectors and voltages. It uses a standard 5V USB-C connector.

Most USB-C chargers for mobile phones or tablets should be usable with the uConsole, however you need to be careful when using "high power" adapters designed for laptops.

⇒ The Power Supply page has more details.

Cases

Coming soon.

Below is a quick list of what I plan to include on the page when I write it.

- USA Gear case

- has very little room for accessories (designed for a camera display?)

- semi-rigid, not solid

- Pelican 1200

- has more room

- SOLID

- other colours available (black, tan, orange, yellow)

- more expensive

- has a lifetime warranty

Power

Indicators

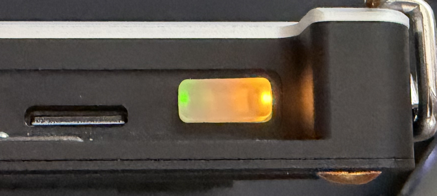

The uConsole comes with a translucent white rubber button for power. The button is translucent because there are two LEDs on either side of the button itself, which can be seen through the button:

-

A green LED, which indicates that the uConsole's power is on.

-

A yellow LED, which indicates that the uConsole is charging the internal batteries.

Power Supply

The uConsole requires a 5V 2A power supply with a USB-C connector. The power supply can be used to charge the batteries, and can also be used to power the unit when batteries are not installed.

A power supply is NOT included with the kit. Most USB-C chargers for mobile phones or tablets should be usable with the uConsole, however ...

The uConsole does not perform USB-PD power negotiation. It expects the USB-C port to receive 5V power, and can draw up to 3A. The highest I've seen it draw is 1.8A, or 5W). Chargers which only supply 5V, or which correctly implement the USB-PD power negotiation process, should work with the uConsole.

What this means is, any USB power supply which only supplies 5V, or any newer power supply which can supply more but correctly implements USB-PD, should be usable with the uConsole. Be aware that some power supplies may not negotiate USB-PD correctly and can damage the uConsole, as this forum post illustrates.

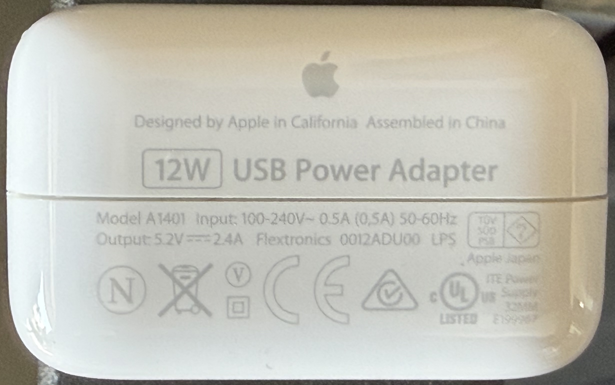

-

This power supply (I think from an old iPad) only outputs 5V, up to 2.4 A. My uConsole works just fine with it.

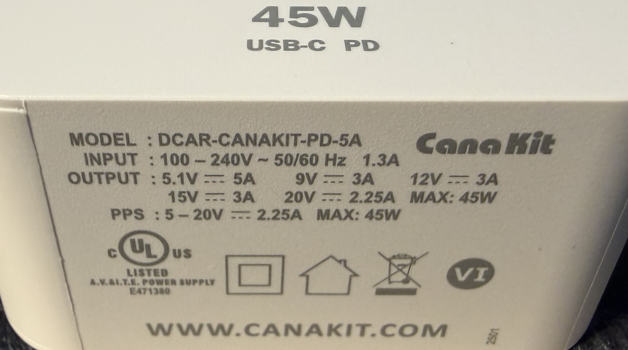

-

This power supply (from a newer iPad Pro) is the one I carry in the box with my uConsole. It can output 5V or 9V, up to 3A. It is marked as supporting USB-PD.

-

This power supply came with a Raspberry Pi 5 kit. It isn't explicitly marked as supporting USB-PD, however the list of voltage levels is identical to the list of voltage levels supported by USB-PD. A quick test shows that it doesn't try to supply more than 5V to the uConsole, and the uConsole was able to charge the battery while plugged into it.

Batteries

The uConsole is powered by a pair of 18650 rechargable Li-ion batteries. The battery holder within the unit can hold either flat-top or button-top batteries. The main board has the necessary circuitry to charge the batteries, so you don't have to remove them in order to recharge them.

The batteries are connected in parallel, which means that it will run with only a single battery installed. It also means that if you need to replace the batteries, you could theoretically swap them out one at a time while the system is running, however this is not recommended.

Batteries are NOT included with the kit. This is due to safety regulations around shipping lithium batteries.

You probably won't find these batteries in a normal retail store. I've seen them at a dedicated battery store, and apparently most "vape" shops sell them as well (apparently vape pens use them?) They can also be ordered from suppliers like Amazon or AliExpress, however you need to be careful not to order "fake" batteries which don't have the capacity they claim.

⚠️ Battery Capacity

The physical and electrical characteristics of Li-ion batteries limit the capacity of a 18650 cell to about 3600 mAh, with the "normal" capacity being 2500-3000 mAh. If you see batteries advertised with higher capacities (such as 5000 or 9900 mAh), be aware that the seller is probably lying about the capacity, and their claims should not be trusted.

I admit, I fell for this. In 2025-01 I bought some batteries which were advertised as having 5000 mAh capacity, for a different device (an Arpeggio mini-synth, if you're curious). The batteries do work, however it was the first time I had ever used 18650 cells so I didn't realize that only two hours of run-time wasn't normal.

I have since ordered a set of higher quality batteries (links below), with a charger that has a capacity tester function. The new batteries all test between 2890 and 2960 mAh, while the old ones that I tested only had 1380 and 1400 mAh. I have thrown the old batteries out.

Calibration

When the batteries in the uConsole are changed, you may need to calibrate the power controller so it knows the maximum capacity of the new batteries and can calculate the right percentage to show in the on-screen battery indicator.

This is covered on the Battery Calibration page.

Links

Batteries

-

Amazon - Authentic Samsung30Q, 3.7V Flat Top Real 3000mAh 18650 Battery Rechargeable 30Q (4 Pack)

While reading about peoples' experiences with "fake" batteries, I found several web sites which recommended buying only genuine Panasonic or Samsung batteries. These are advertised as "Authentic Samsung", so I'm hoping they will last longer than two hours.

These arrived on 2026-01-06. The word "Samsung" doesn't appear anywhere on the batteries or packaging, but so far they seem to be a lot better than the previous "fake" batteries I was using.

The first thing I did was put them on a charger. Unlike the previous "fake" batteries I was using (which went from empty to "full" in less than two hours), I had to charge them for over four hours before the charger considered them "full", and the uConsole immediately recognized them as 100% full, rather than showing a seemingly random number between 25% and 70%.

Charger

-

This unit can charge up to four 18650 batteries at once, and can also charge normal AAA/AA/C batteries.

It also has a capacity test function which charges the battery to full, discharges it to empty, then charges it back to full again, measuring how much power the battery "takes" when charging from emtpy back to full.

Power Testers

These are little gadgets that plug in between the charging cable and the uConsole, to show how much power the uConsole is drawing.

-

This connects between the charging cable and the uConsole. The display cycles between volts, amps, and watts every few seconds. If you have a laptop or other device which draws more power, this one says it can measure up to 240W (i.e. 5A at 48V).

-

This also connects between the charging cable and the uConsole. The product description says that it shows volts, amps, and watts, however I've only ever seen it show watts. It also shows a "PD" indicator when the connection was negotiated using USB-PD (seen while charging an iPad). This one says it can measure up to 100W (5A at 20V).

Other

Related links (mostly Clockwork Pi Forum posts) I used while writing this page, not in any particular order.

- uConsole: How Does Charging Work?

- Battery level indicator?

- uConsole battery calibration issue (not useful by itself, but has pointers to other posts)

- uConsole not consistently charging and intermittent shutdowns

- 10'000 mAh Battery

- Axp228: properly report energy/power readings 👍 yatli wrote and describes updated axp228 driver

/sys/class/power_supply/axp20x-battery/calibrate- write 1: initiate battery full capacity calibration

- read 0: calibration disabled

- read 32: calibration enabled but not active

- read 48: calibration in progress

Battery Calibration

When you put a new set of batteries into the uConsole, the power controller needs to "learn" the capacity of the new batteries. This process is known as "calibration". Until you calibrate the power controller, it won't know the real capacity of your batteries, and may report 65% when they're empty, or (as mine were when I started writing this page) 34% when they've been charging overnight and are presumably full.

I've seen several web pages, mostly on the Clockwork Pi forums, which tell how to do this. Every set of directions is either a copy of the same three sentences, or a reference to those three sentences.

It seems to me that some details are missing, so I'm writing this page to try and provide directions which are (1) more complete, and (2) more detailed. A big part of this is because I'm currently trying to do the calibration process and seeing some really weird results.

My Experience So Far

A year ago I bought a set of 18650 batteries with a stated capacity of 5000 mAh, to use with a different device. I didn't notice anything wrong with them at the time, but I also had never used 18650's before so I didn't really have a good idea of what to expect.

Using the batteries I had ordered a year ago ...

-

I left the uConsole plugged in and running overnight, with a tester between the cable and the uConsole to show how much power was being transferred. When I started, the power cable was supplying 9W, presumably to run the system and charge the batteries.

-

In the morning it showed the battery level at 68%, and the power cable was supplying 4W.

-

I ran the command to start the calibration process, unplugged the power cable, and ran a command to "exercise" the CPU (computing SHA256 checksums of 1GB blocks of data from

/dev/urandom). -

About 2h30m later, the system died.

-

I plugged the power cable back in to charge the batteries. The tester on the power cable showed that it was supplying 9W.

-

After about two hours this dropped from 7W to 3W, then over the next half hour it gradually drifted down to zero. This tells me that the power controller stopped charging the batteries, presumably because the batteries were full.

-

When I powered the system back on, the power controller reported the batteries were only 43% full ... and with the power cable connected and supplying 4W, the reported battery level drifted DOWN to 25% over half an hour, and is now sitting at a steady 25%.

-

I then unplugged the cable and let the uConsole run from just the batteries. It died in about 1h45m.

Fake Batteries

While researching this page, I came across several pages which explained that, with the battery chemistries being used today, 18650 batteries cannot hold more than about 3600 mAh each. This means that the batteries I was using, did not have the capacity they claimed to have. Two of these pages also recommended buying genuine Panasonic or Samsung batteries.

Back on Amazon, I found a listing for "Authentic Samsung" batteries. I took the chance and ordered these, along with a charger with a capacity tester.

- Authentic Samsung30Q, 3.7V Flat Top Real 3000mAh 18650 Battery Rechargeable 30Q (4 Pack)

- IMREN 18650 Capacity Tester,18650 Battery Charger with Discharge & Testing Function, 21700 Battery Charger with LCD Screen Display Capacity Suit for 18650 21700 20700 1.2V Ni-MH/Ni-CD LiFePO4 Battery

The capacity tester showed all four of the new batteries having 2890-2960 mAh each, and two of the older batteries I had been using, having 1380 and 1400 mAh (I stopped testing and threw all six of them out, not worth wasting the time to prove that junk is junk).

I've been using the new batteries for about a week, they last over four hours in the uConsole.

Procedure

To calibrate the power controller for a new set of batteries ...

-

If you have a power tester (see below), plug it in between the power cable and the uConsole so you can monitor how much power the is being consumed, rather than having to rely only on the incorrect information from the uConsole's charging circuitry. (After all, the whole point of calibrating the power controller is to be able to trust the information it provides.)

-

If the uConsole is configured with any "auto shutdown" or "battery saver" featires, disable them. We're going to be deliberately running the batteries down to empty, which means we want the machine to not shut down by itself.

-

Plug in the charging cable and wait until the batteries are full.

If you're using a power tester, make sure it's showing how much power (watts) the uConsole is consuming. If it's showing volts and amps, you can multiply the values to get watts (i.e. 1.8A at 5V => 9W).

You can watch the state of the battery using

upower, which is normally included with the Raspberry Pi images.-

Run

upower -eto list all power-related devices. The devices will have names that look like/org/freedesktop/Upower/devices/..., the internal battery controller will have a name containingapx20x_battery. -

Run

upower -i DEVICEto see the properties of that device.

BAT=$( upower -e | grep 'apx20x_battery' ) watch -n5 "upower -i $BAT | egrep '(state|percentage):'"You can leave the

watchcommand running in a terminal window while performing the steps below, this will run the command over and over every five seconds so you can watch the numbers as they change.When the batteries were full, or at least when I thought the batteries were full, I noticed the following:

- In the

watch ... upower -ioutput, thestatevalue started changing betweencharginganddischarging. - On the power meter, the usage dropped from 9W to 2W.

-

-

Tell the power controller to start the calibration process. In a terminal window ...

echo 1 | sudo tee /sys/class/power_supply/axp20x-battery/calibrate -

Unplug the power cable, so the uConsole is running on batteries.

-

Let the unit run until the battery dies. This can take a while.

If you'd rather not wait so long, you can do things on the system to increase it's power consumption. On my uConsole, I ran the following command in a terminal window to keep the CPU busy:

while true ; do dd status=none bs=1G count=1 if=/dev/urandom | sha256sum ; done | cat -nThis command makes the kernel generate 1 GB of random numbers (reading from

/dev/urandomgenerates random bytes), calculates the SHA256 checksum of those numbers, and prints the result, over and over until the system dies. (Thecat -nat the end puts a count in front of each checksum, in case you're curious how many GB of random data have been processed.) -

When the uConsole dies, plug the power cable in, but do not power it on.

-

Wait until the batteries are full. If you're using a power tester on the wire, you'll see the power consumption drop to zero when this happens. If not, all I can suggest is to let the unit charge overnight.

-

When you power the unit on, it should have an accurate picture of the batteries' true capacity, and the percentages should be accurate.

Software

Coming soon.

Below is a quick list of what I plan to include on the page when I write it.

- Clockwork Pi "factory" images

- outdated, buggy

- Rex images - what I'm using

- Debian release names (bookworm/trixie)

- what the "lite" release is

- uConsole-Image-Builder images - tried one, had issues (screen rotation etc.)

- how to write an image to a card

- Keyboard firmware

- 4G extension card firmware

git

This section contains pages dealing with the git source code control system.

Fix a Commit before Creating a New Branch

2024-06-20 jms1

Our official workflow at $DAYJOB is to commit all work to a ticket-specific feature branch, and then create a pull request to get it merged into the primary branch. This allows people other than yourself to review your work before it gets merged into the main code.

I'm not perfect, sometimes I forget to create a new branch first, and accidentally create commits directly on the primary branch. Usually I realize this before pushing anything, which means I can fix it on the local machine first.

Quick Explanation

What we're going to do is this:

-

Create the new branch, pointing to the last of the new commits.

-

Move the

mainbranch to point to what it was pointing at before we started creating commits.

Starting Condition

In this examples below, we're going to assume that the recent commits in the repo look like this:

$ git tree1 -a

* 67f8356 (HEAD -> main, origin/main) 2024-06-20 jms1(G) ABC-123 typo

* 8a837d6 2024-06-20 jms1(G) ABC-123 new feature

* 1d3158c 2024-06-13 jms1(G) Merge branch 'ABC-101-previous-feature'

|\

| * d60b020 (origin/ABC-101-previous-feature) 2024-06-12 jms1(G) ABC-101 previous feature

|/

* 3accd26 2024-05-29 jms1(G) ABC-93 old feature

ℹ️

git tree1This is one of my standard git aliases.

In this case, I created two commits, 8a837d6 then 67f8356, then realized I should have created a feature branch for it first.

Create the new branch

Part of what you need to accomplish is creating a new branch, pointing to what should be the HEAD of that branch. Luckily, the current HEAD is already pointing to that commit, so if we just create the new branch here, we'll be good.

$ git branch ABC-123-new-feature

Looking at the repo after this, you can see that the new "ABC-123-new-feature" branch exists and is pointing to the correct commit.

$ git tree1 -a

* 67f8356 (HEAD -> main, origin/main, ABC-123-new-feature) 2024-06-20 jms1(G) ABC-123 typo

* 8a837d6 2024-06-20 jms1(G) ABC-123 new feature

* 1d3158c 2024-06-13 jms1(G) Merge branch 'ABC-101-previous-feature'

|\

| * d60b020 (origin/ABC-101-previous-feature) 2024-06-12 jms1(G) ABC-101 previous feature

|/

* 3accd26 2024-05-29 jms1(G) ABC-93 old feature

Move the main branch

This will "move" the head of the main branch to point to the commit that it had before we started working.

Identify the commit where the branch should point

First, identify the commit that it should be pointing to.

In this example, it should be pointing to commit 1d3158c. You can refer to the commit using its hash, or using any other branch or tag name which points to that commit. In many cases, origin/main will be usable.

Check out the main branch

$ git checkout main

At this point the repo will look like this:

$ git tree1 -a 67f8356

* 67f8356 (HEAD -> main, origin/main, ABC-123-new-feature) 2024-06-20 jms1(G) ABC-123 typo

* 8a837d6 2024-06-20 jms1(G) ABC-123 new feature

* 1d3158c 2024-06-13 jms1(G) Merge branch 'ABC-101-previous-feature'

|\

| * d60b020 (origin/ABC-101-previous-feature) 2024-06-12 jms1(G) ABC-101 previous feature

|/

* 3accd26 2024-05-29 jms1(G) ABC-93 old feature

In this particular example we were already on the main branch, so in this case this wasn't really necessary. However, you should get in the habit of using git checkout first, since that controls which branch git reset will be modifying.

Move the main branch

The git reset command changes what the current branch points to.

$ git reset --hard 1d3158c

At this point the repo will look like this:

$ git tree1 -a 67f8356

* 67f8356 (origin/main, ABC-123-new-feature) 2024-06-20 jms1(G) ABC-123 typo

* 8a837d6 2024-06-20 jms1(G) ABC-123 new feature

* 1d3158c (HEAD -> main) 2024-06-13 jms1(G) Merge branch 'ABC-101-previous-feature'

|\

| * d60b020 (origin/ABC-101-previous-feature) 2024-06-12 jms1(G) ABC-101 previous feature

|/

* 3accd26 2024-05-29 jms1(G) ABC-93 old feature

As you can see ...

-

The

mainbranch now points to the commit that it would have pointed to if we had created the new branch before creating any commits. -

The new

ABC-123-new-featurebranch points to the most recent commit in the work you've already done.

Keep working

At this point, the problem is fixed. You can continue working as if you had created the branch before starting, including pushing the new branch to a remote and creating a pull request.

Changelog

2024-06-20 jms1

- Created page (from notes when I actually made this mistake)

Multiple Remote URLs

When you clone a git repo to your machine, it creates a "remote" called origin, pointing to wherever you cloned the repo from. This allows you to use commands like git fetch and git pull to download updates from the same source, and git push to upload changes back to that source.

In some cases, you may want a repo to have multiple "remotes". There could be any number of reasons for this, I do it in order to maintain backup copies of my own repos in case the repo's "primary" server is unavailable.

There are a few ways to handle this.

Multiple Remotes

Repo directories can have multiple "remotes". As a (contrived) example ...

$ git remote -v

foks foks://(redacted)/jms1.info (fetch)

foks foks://(redacted)/jms1.info (push)

github git@github.com:kg4zow/jms1.info (fetch)

github git@github.com:kg4zow/jms1.info (push)

With this kind of configuration, you can pull or push commits from either remote, as needed. I've done this with repos in the past, usually as part of a migration from one git hosting service to another.

For example, to copy the repo from FOKS to Github, I could do something like this:

git fetch -p foks

git push --all github

git push --tags github

One problem with doing this is, you have to keep track of which remotes have which commits, and be sure to use the correct remote names with your commands.

Setting this up

Assuming you've cloned a repo from a remote called origin ...

-

If you're creating a new repo on a remote host (such as Github), be sure to create an empty repo. If it offers you any options to set up a README or LICENSE file, don't use them. These options work by starting an empty repo and adding a commit which adds those files. Pushing an existing repo into a non-empty repo is ... not imposisble, but it's not simple (you have to "merge" the two).

-

On your local machine, use

git remote addto create a new remote.git rmeote add github git@github.com:kg4zow/jms1.info -

Many services (like Github) will set a repo's "primary" branch to the first branch pushed into the repo. In order to avoid later issues, your first push should contain only the branch that you consider to be "primary".

I normally use

mainas the primary branch name in my repos.git checkout main git push github main -

Push the other branches, and any tags.

git push --all github git push --tags github

At this point, the new github remote contains every commit, branch, and tag that the .git/ directory on your workstation knows about.

Multiple URLS in the Same Remote

Another option is to add multiple "push" URLs to the same remote.

With this configuration, every time you push commits to that remote, it will push them to all of the URLs, one after the other.

I do this with most of my personal repos, in order to have "backup copies" of my repos in case their "primary servers" are not available.

Setting this up

As an example, this is how I set up the repo holding the jms1.info site's source code. (These are the actual commands I typed on my workstation when I added Github as a second URL for this repo on 2025-10-17.)

-

Create the Github repo.

gh repo create --public kg4zow/jms1.info -

Clone the existing FOKS repo to a new directory.

cd ~/git/ git clone foks://(redacted)/jms1.info jms1.info -

Add the Github URL to the existing remote.

cd ~/git/jms1.info/ git remote set-url --add origin git@github.com:kg4zow/jms1.info -

Push the contents to Github.

git push main git push --all git push --tagsNote that these commands don't require a "remote" name, because the repo (the directory on my workstation) only has one remote, called

origin.Also note that these

git pushcommands did try to push commits to FOKS. However, nothing was actually pushed because the FOKS server already had the commits and tags I was pushing.

From this point forward, whenever I make changes and push commits, the git push command pushes those changes to both locations, one after the other.

My Configuration

I've been using git for over ten years. Over that time I've found a collection of configuration options that seem to work well for me. I normally configure all of my workstations with these options.

These options includes a collection of "aliases" which really make my life easier.

Configuration Options

Identity

These options set the default name, email, and PGP key used for commits. The values of the options are different on personal and work machines.

-

Personal machines

git config --global user.name "John Simpson" git config --global user.email "jms1@jms1.net" git config --global user.signingkey "0xE3F7F5F76640299C5507FBAA49B9FD3BB4422EBB" -

For

$DAYJOBmachinesgit config --global user.name "John Simpson" git config --global user.email "jms1@domain.xyz" git config --global user.signingkey "0x1234C0FFEEC0FFEEC0FFEEC0FFEEC0FFEEC0FFEE"

Note that I also have aliases for cases where I might need to sign a commit using my personal "identity", on a $DAYJOB workstation. Because my PGP and SSH keys are stored on Yubikeys, I can just plug the "other" Yubikey into the machine and use the correct alias.

These aliases are documented below.

For all machines

I use these configuration options on every machine.

git config --global core.editor "nano"

git config --global core.excludesfile "$HOME/.gitignore_global"

git config --global credential.helper "cache --timeout=300"

git config --global init.defaultBranch "main"

git config --global clone.defaultBranch main

git config --global log.showSignature true

git config --global push.default "simple"

git config --global pull.rebase false

git config --global gpg.ssh.allowedSignersFile "$HOME/.config/git/allowed_signers"

All of my commits and tags are signed. This is a requirement at $DAYJOB, and a good idea in general.

git config --global commit.gpgsign true

git config --global tag.gpgSign true

Commit message template

This sets up a text file which is used as a template when git uses a text editor to create or edit a commit message.

In particular, I generally use the 50/72 format format when writing commit messages. As you can see below, having the ^ marks at 50 and 72 characters makes it easier for me to stay within the limits.

-

To configure the file:

git config --global commit.template "$HOME/.stCommitMsg" -

The contents of the file:

$ cat ~/.stCommitMsg # 50 ^ 72 ^ # First line: start with ticket number(s), limit to 50 characters # BLANK LINE # Additional lines: limit to 72 characters $ git config --global commit.template "$HOME/.stCommitMsg"

Note that empty lines and lines starting with # are not included in the actual commit message.

Aliases

Aliases allow you to "make up your own git commands". For example, if you were to do this ...

git config --global alias.showfiles "show --name-only"

... then git showfiles would be the same as git show --name-only.

My Usual Aliases

These are the aliases I've built up over the years. Some of these I use dozens of times every day.

git config --global alias.log1 "log --oneline --no-show-signature --abbrev=8 '--pretty=tformat:%C(auto)%h%d %C(brightcyan)%as %C(brightgreen)%al(%G?)%C(reset) %s'"

git config --global alias.tree "log --graph --decorate"

git config --global alias.tree1 "log --date-order --decorate --graph --no-show-signature '--pretty=tformat:%C(auto)%h%d %C(brightcyan)%as %C(brightgreen)%al(%G?)%C(reset) %s'"

git config --global alias.tagdates "log --tags --simplify-by-decoration --pretty=\"format:%ai %d\" --no-show-signature"

git config --global alias.taghashes "log --tags --simplify-by-decoration --pretty=\"format:%H %d\" --no-show-signature"

git config --global alias.id "describe --always --tags --long --abbrev=8 --dirty"

git config --global alias.top "rev-parse --show-toplevel"

Changes for older git versions

Some colours and tags were added between git 2.16.5 and 2.37.0.

%as(commit date YYYY-MM-DD) ->%adwith--date=shortoption%al(author email local part) ->%an(author name)

For older versions without these newer colour codes, I use these aliases instead.

git config --global alias.log1 "log --oneline --no-show-signature --abbrev=8 --date=short '--pretty=tformat:%C(auto)%h%d %C(cyan)%ad %C(green)%an(%G?)%C(reset) %s'"

git config --global alias.tree1 "log --date-order --decorate --graph --no-show-signature --date=short '--pretty=tformat:%C(auto)%h%d %C(cyan)%ad %C(green)%an(%G?)%C(reset) %s'"

Sign commits using specific keys

These aliases allow me to sign commits using my personal PGP key on the work machine, or vice-versa, by physically plugging the correct Yubikey into the machine. By themselves they won't be very useful to anybody else, but they could be useful as examples if you need to use different keys for different repos.

git config --global alias.commitp "commit --gpg-sign=E3F7F5F76640299C5507FBAA49B9FD3BB4422EBB --author='John Simpson <jms1@jms1.net>'"

git config --global alias.commitw "commit --gpg-sign=1234C0FFEEC0FFEEC0FFEEC0FFEEC0FFEEC0FFEE --author='John Simpson <jms1@domain.xyz>'"

Notes

Random notes relating to git

Commits signed with SSH keys

There used to be a brief explanation here, I've moved this to its own page.

⇒ Signing commits with SSH keys

Configuration scope

The git config command operates on different files, depending on which options you give it.

| Option | File | Scope |

|---|---|---|

--local (or none) | REPO_ROOT/.git/config | the current repo |

--global | $HOME/.gitconfig | the current user |

--system | /usr/local/etc/gitconfig | all users on the system |

--worktree | WORKTREE_ROOT/.git/config.worktreeor REPO_ROOT/.git/config | the current "worktree" or --local if no worktree is active |

--file ___ | specified | depends on the file |

Changelog

2025-04-30 jms1

- Fixed a typo

- Removed info about git commits signed by SSH keys, since there's now a dedicated page for that

2024-06-20 jms1

- Created this page (from pre-existing notes)

Signing commits with SSH keys

Newer versions of git support the ability to sign commits using an SSH key.

Specifically ...

- Git v2.34 or higher

- OpenSSH 8.0 or higher

🛑 Don't Do This

I personally think that using SSH keys to sign commits is a bad idea.

The main reason I say this is because SSH keys are not linked to specific identities. An SSH key pair is JUST a pair of numbers - there's nothing about an SSH key which says "I belong to John Simpson". If I don't tell you which SSH key I actually use, you have no way to know whether a given key belongs to me or not.

Git allows users to set their own name and email address when creating commits. This means that anybody can create a commit which claims to be made by any name and email address. Somebody could use your name and email address on a commit, and sign the commit using an SSH key that they created on their own. It wouldn't be the correct key (i.e. the key that you normally use), but it would be signed, and the commit would have your name and address as the author ... so if somebody was looking at the commit but not verifying the signature, there's a good chance this would be enough to fool them.

If a command like git show is looking at a commit which was signed using an SSH key, all it can really tell for sure is which SSH key signed it. It doesn't know who that key actually belongs to, unless you tell it which keys belong to which people. (I explain how to do this below.)

This is why you need to verify the signatures on commits - to be sure that the person who the commit says is the author, actually is the author.

Verifying commits signed by SSH keys involves some extra configuration which isn't required for commits signed by PGP keys.

Another reason for not using SSH keys to sign commits is that, if the secret key is compromised, there is no way to revoke the key. If your secret key escapes, YOU have to hunt down every person who has a copy of your public key and tell them to stop using it.

Again, I personally don't use SSH keys to sign commits, but I do feel like people should be allowed to make their own decisions. As long as you understand the risks, if you want to do it, more power to you.

Overview

There are two basic operations involved with signed commits, no matter what key type is used: signing commits, and verifying signatures.

Git needs to be configured for both of these.

Signing Commits

There are a few things to configure in order to make git sign your commits.

Sign one commit

Creating a signed commit is done by adding the -S (uppercase) or --gpg-sign option to your git commit command line, like so:

git commit -S -m 'commit message'

Note that only that one commit will be signed.

Tell git to sign commits by default

You can also configure git to sign commits by default, using this command:

git config --global commit.gpgsign true

After doing this, every git commit command will create a signed commit, without your having to include the -S option in the command.

ℹ️ Create an un-signed commit

If you do this and need to occasionally not sign a commit, you can add the

--no-gpg-signoption to thegit commitcommand, and that one commit will be created without a signature.

Documentation for the commit.gpgsign option

Tell git to use an SSH key when signing commits

Git normally uses a PGP key to sign commits. Setting this config option tells it to use an SSH key instead.

git config --global gpg.format ssh

Documentation for the gpg.format option

Tell git which SSH key to use

We can do this in one of two ways:

-

Use the filename to the public key file:

git config --global user.signingKey $HOME/.ssh/id_rsa.pub -

Include the public key itself in git's configuration:

git config --global user.signingKey 'key::ssh-ed25519 AAAA... comment'

Documentation for the user.signingKey option

Verifying Commits

Before git will be able to verify commits, it first needs to be told which SSH keys belong to which people. We do this by creating an "allowed signers" file, which is a simple text file containing email addresses and SSH public keys.

Create an Allowed Signers file

The file can be created wherever you like, as long as it's readable by the git command itself. The example below will use $HOME/.git_allowed_signers as the filename, but you can use whatever filename you like.

Each line in the file should contain ...

- One or more email addresses. If multiple email addresses use the same key, the addresses should be separated by commas.

- An SSH public key, optionally including the same coments you normally see after the key itself.

For example ...

jms1@example.com ssh-ed25519 AAAAC3NzxxxxxxxxxxxxxxxxxxxxxxxxxRK4m 2025-04-29 fake key

Documentation for the Allowed Signers file format is in the ssh-keygen(1) man page. If your system isn't set up to view man pages, this web page has a copy, however OpenSSH 8.0 was the first version to use an Allowed Signers file and I'm not sure what version of OpenSSH this web page was taken from.

ℹ️ Trust the man pages

You should always trust the documentation in your system's man pages over anything you find online. This is because the man pages are installed with the software itself, so they're guaranteed to be the same versions.

This is especially true of programs like OpenSSH, where new options are being added with every new version.

Tell git where to find the Allowed Signers file

This is just another git config command.

git config --global gpg.ssh.allowedSignersFile $HOME/.git_allowed_signers

Documentation for the gpg.ssh.allowedSignersFile option

Tell git to always show signatures

This is optional. I do it as part of the git configuration on all of my machines.

This will make every git log or git show command use the --show-signature option by default, so you won't have to remember to include it in every command.

git config --global log.showSignature true

Messages

You may run into the following messages when working with SSH-signed commits.

Good "git" signature

Good "git" signature for user@example.com with RSA key SHA256:SHA256:oSAqmDr7/LKI+STyBrT79IcFmbyt3h1P1niXdg0I+94

✅ This is good. It means that the commit was signed by an SSH key which is in your Allowed Signers file.

This message is actually telling you three things:

-

Good "git" signaturetells you that the commit's contents and message haven't been modified since the signature was created. -

for user@example.comtells you whose SSH key signed the message. -

with RSA key ...gives you the fingerprint of the key which signed the commit.

No principal matched.

Good "git" signature with RSA key SHA256:oSAqmDr7/LKI+STyBrT79IcFmbyt3h1P1niXdg0I+94

No principal matched.

❓ This means that the commit was signed by an SSH key that isn't recognized (i.e. isn't in your Allowed Signers file).

This mesage is actually telling you three things:

-

Good "git" signaturetells you that the commit's contents and message haven't been modified since the signature was created. -

with RSA key ...gives you the fingerprint of the key which signed the commit. -

No principal matchedmeans that it can't tell you who signed the commit.

If you're able to contact the commit's author and get a copy of their SSH public key, you can get its fingerprint using ssh-keygen -l, like so:

$ ssh-keygen -l -f their_id_rsa.pub

4096 SHA256:oSAqmDr7/LKI+STyBrT79IcFmbyt3h1P1niXdg0I+94 user@example.com (RSA)

As you can see, the fingerprint printed by this command matches the fingerprint shown by the git show or git log command, so you can be sure that this commit was signed by that person.

gpg.ssh.allowedSignersFile needs to be configured and exist

❓ If you work with repos containing commits signed by SSH keys, and git is showing signatures, you may see this message when looking at commits that were signed by SSH keys:

error: gpg.ssh.allowedSignersFile needs to be configured and exist for ssh signature verification

The message means exactly what it says: either you haven't configured the gpg.ssh.allowedSignersFile option, or the file that it points to doesn't exist.

If you don't want to see this message, there are a few options:

-

Use the

--no-show-signatureoption in your command.git show --no-show-signature a1b2c3d4This option will override the

log.showSignatureconfig option. -

You can configure git to use an Allowed Signers file, but leave the file empty.

> $HOME/.git_allowed_signers git config --global gpg.ssh.allowedSignersFile $HOME/.git_allowed_signersNote that the first command starts with

>. This will create the file if it doesn't already exist, and empty the file if it does.ℹ️ Shell Output Redirect Operator

This is the same

>operator that you would use to send the output of a command to a text file. In this case we're not running a command, so we're sending nothing to the file. -

You can configure git to use

/dev/nullas the Allowed Signers file.git config --global gpg.ssh.allowedSignersFile /dev/nullThis is just like setting up an Allowed Signers file and leaving it empty, except that you don't have to create the file at all. The

/dev/nullfile always exists on macOS and Linux systems, and if anything (includinggit) tries to read data from it, it will act like any other empty file.

Changelog

2025-04-30 jms1

- Created page

Keybase

Keybase is a system which provides end-to-end encrypted services, including ...

- Text chat, between groups of specific people, and within "teams".

- Cloud file storage, with the files accessible to yourself, a specific set of people, or a team.

- Git repositories, accessible to yourself or to a team.

- Encrypting, decrypting, signing, and verifying encrypted messages which can be sent by some other method (such as email, or a "corporate approved and monitored" chat system).

Keybase also provides a way to prove your identity on some other service, and to allow others to find you based on those identities. These services include Github, Reddit, and Hackernews. You can also "prove" that you own specific DNS domains and web sites.

You can also attach PGP keys to your Keybase account. This was actually Keybase's original application, as a way to find other peoples' PGP keys when all you knew them as, was a username on a site like Reddit.

For example, if you only know somebody as "kg4zow on Github", if a Keybase user has proven that they own that Github user (hint: I did), you can use Keybase to chat or share files with them, secure in the knowledge that the person you are communicating with on Keybase is the same person as "kg4zow on Github".

KBFS: Cloud File Storage

KBFS, or Keybase Filesystem, is a cloud file storage system. Keybase provides 250 GB of encrypted cloud storage for each user, as well as 100 GB for each team. This storage can be accessed by any Keybase client which is registered as a device on a user's account.

Each user also has a public directory available, whose contents can be viewed by any other Keybase user. For example, if you're logged into Keybase you can look in /keybase/public/jms1/ to see the files that I'm sharing with the world.

FUSE and Redirector

For Linux, macOS, and ms-windows systems, Keybase provides a way to "mount" KBFS so it appears as part of the machine's filesystem. The details are different for each operating system, but Linux and macOS both use a FUSE (Filesystem in USErspace) module to translate "file accesses" to the appropriate API calls needed to upload and download encrypted blocks from Keybase's cloud servers.

It's possible for multiple people to be logged into a computer at the same time, so Keybase needs to ensure that different users on the same machine can't see each others' Keybase files. The mechanics of how this happens are different for each operating sytem.

I don't want to go into a lot of technical detail, so the short version is this:

-

Each user on a computer has their own "view" of KBFS, mounted in a different directory.

-

KBFS uses a thing called a "redirector", which redirects file accesses to the user-specific mount directory for whatever user is accessing it.

-

On Linux, the redirector is mounted as

/keybase. -

On macOS, the redirector is mounted as

/Volumes/Keybase. Some systems may also have/keybaseas a symbolic link pointing to/Volumes/Keybase.

-

The idea is, all users on the system can use paths starting with /keybase/, and they will see their own "version" of KBFS, containing the files that they have access to.

Because of this, the normal way to write the names of files stored in KBFS is using paths starting with /keybase/.

ms-windows

You will note that I didn't mention ms-windows at all. This is because I haven't used ms-windows since the days of "windows 7", and I don't remember the details of how KBFS works on windows.

I have a vague memory of there being a third-party program which needs to be installed - a quick web search tells me that what I'm thinking of is probably Dokan. I don't remember if this is distributed with the Keybase installer, or if you have to download and install it yourself.

KBFS Directories

KBFS has three high-level categories of directories: public, private, and team. Under these categories, folders "exist" whose name tell who have access to them.

Public

-

/keybase/public/alice/is readable by anybody, but only writable by Alice. -

/keybase/public/alice,bob/is readable by anybody, but only Alice and Bob are able to write to it. (This is not something you see a whole lot, but it works if you have a need for it.)

Private

-

/keybase/private/alice/is only accessible by Alice (or technically, by devices on Alice's account). -

/keybase/private/alice,bob/is accessible to both Alice and Bob. -

/keybase/private/alice,bob#charlie,david/is accessible to Alice, Bob, Charlie, and David.- Alice and Bob (before the

#) are able to read and write files. - Charlie and David (after the

#) are able to read the files but not write them.

As you can see, it's possible to create private folders where different people have different access. However, once that folder exists, the list of who has what access can never change. If you need to remove somebody's access, or change them from read-only to read-write, your only option is to create an entirely new folder whose name is the new list of who has what access, and move the files from one to the other. The old one will still "exist", it'll just be empty.

Keybase added "Teams" as a way to deal with this problem. Users can be added or removed from a team, or have their roles changed, without needing to change any team or directory names.

- Alice and Bob (before the

Team

-

/keybase/team/xyzzy/is accessible by Keybase users who are members of thexyzzyteam. Each user's role within the team controls what access they have to the files in the team's folder. -

/keybase/team/xyzzy.dev/is accessible by Keybase users who are members of thexyzzy.devteam. This is a "sub-team" of thexyzzyteam. (Sub-teams are explained below.)

ℹ️ The user and team names shown above are all examples. I don't know if there are users or teams with those names.

Teams are explained in more detail below.

Space

I mentioned this above, but to make it more obvious ...

- Each user is given 250 GB of storage for free.

- Each team is given 100 GB of storage for free.

- There is currently no limit to the number of teams which can be created.

The one restriction is, teams cannot have the same name as a user. This means that, because I already have the username jms1, I could not also create a team called jms1.

Teams

"Teams" are groups of Keybase users. Users can be added to or removed from teams dynamically.

This is different than a "group of users" situation. A "group chat" between Alice, Bob, and Charlie will only ever contain those people. If you try to add a fourth person, it creates a new group chat between those four people. The original three-way chat will still exist, and the fourth person will never be able to access it.

-

When users are added to a team, they will have access to the team's chat history, shared files, and git repos.

-

When users are removed from a team, they will immediately no longer have access to the team's chat history, shared files, or git repos. (If they previously saved anything they will still have access to their own copies, but they won't be able to access

Roles

Users who are added to a team will be able to see the team's chat history, shared files, and git repositories, subject to their "role" within the team.

Available roles are:

-

reader= can participate in team chat rooms, has read-only access to the team's KBFS folders and git repositories. -

writer= same asreader, but has read-write access to the team's KBFS folders and git repositories. -

admin= same aswriter, but can add or remove team members and set their roles, up toadmin. Can also create or delete sub-teams "below" this team (so if somebody is anadminfor the teamxyzzy.dev, they could create anxyzzy.dev.iossub-team). -

owner= Can create or delete sub-teams anywhere below the top-level team, as well as add, remove, and set the role for any user in any sub-team.

Users who are an admin or owner of a team do not automatically have access to its sub-teams' chats or files. They do, however, have the ability to add themselves to the sub-team. This is referred to as "implied admin" permission. (Note that if they do this, the other team members will be able to see that the admin/owner is now a member of the team - there's no way they could give themselves access without it being visible.)

Team admins and owners can set a minimum role needed to write in the team's chat. This is normally set to reader, but can be set to writer or admin if there's a need to have people who can read the team chat but not be able to "speak" in it (i.e. an "announce-only" channel).

Sub-Teams

Teams can have "sub-teams". For example, the xyzzy top-level team might have sub-teams called xyzzy.dev, xyzzy.qa, and xyzzy.sales. Each sub-team has its own list of members, with their own roles for that team.

Keybase Sites

Keybase Sites provides simple web hosting for sites containing static files.

Keybase originally had a web site using the keybase.pub domain, where every user's /keybase/public/xxx/ directory could be viewed. This service was taken down in ... I want to say 2023-02?

They also have a service which can host static pages stored in almost any Keybase directory, using a custom domain name that you own. This is how I'm hosting the jms1.info site (where you're presumably reading this right now).

The documentation is a bit outdated. You can ignore anything that mentions the keybase.pub domain, but the "Custom domains" section still works exactly as described.

My Experience

I've been using keybase since 2017. I've had very few problems with it, and the problems I have had were mostly related to Apple making low-level changes to macOS, and Keybase/Zoom not using the beta versions to test the client before the new macOS is released to the public.

One thing I did find interesting ... when Apple first released computers with the "Apple Silicon" processors, I had an M1 MacBook Air. The Keybase app hadn't been updated to support it yet, and at the time nobody at Keybase had an M1 machine to try it with. One of the Keybase devs sent me what he thought should be a working client, and I was able to test it for them and send back some log files. Keybase released the first client which supported the M1 processor about a week later.

Your Keybase Account

Coming soon.

Keybase on macOS

Coming soon.

Before You Lose Access

Hopefully you're reading this page BEFORE disaster strikes.

I've seen a lot of cases where people create a Keybase account and then lose access to it, because they didn't read the documentation, or they were in a hurry and skipped over steps, or in a few cases, because they created their accounts long enough ago that some of the warnings that the current client shows, didn't exist at the time.

This page will explain a few things that you should do, while you have access to your account, so that if something goes wrong you won't lose access to everything stored in your account.

Background

Multiple Devices

🛑 MAKE SURE YOUR KEYBASE ACCOUNT HAS MULTIPLE DEVICES ON IT. 🛑

This is the most important thing on this entire page.

Keybase encrypts things (chat messages, files, git commits, etc.) using encryption keys which are specific to each device. These keys are stored on each device, and are never sent to any Keybase server.

If you lose the encryption keys for every device on your account, you will lose access to everything stored in the account. This includes ...

- Chat history

- Files stored in KBFS

- Git repositories

If these things are accessible by other people or teams, those other people will still have access, but you won't.

I know I said it above, but I'll say it again.

🛑 MAKE SURE YOUR KEYBASE ACCOUNT HAS MULTIPLE DEVICES ON IT. 🛑

Adding devices to your account

Keybase has clients for Linux, macOS, ms-windows, Android, and iOS. Their web site has directions for how to download and install the software, as well as how to add the device to your existing account.

If you don't physically have a second device that you can install Keybase on, you can create a "paper key".

In fact, even if you have a dozen devices with Keybase installed, you should create a paper key.

If you don't know what devices are on your account, check the "Devices" tab in your Keybase app, or visit https://keybase.io/___/devices (substitute your username where you see ___ in the URL).

Paper Keys

A paper key is a sequence of 13 words which encode a device encryption key. This key is attached to your Keybase account like a normal device key.

They are called "paper keys" because you're supposed to physically write them down on paper, and lock the paper up someplace safe.

Obviously "safe" means that other people shouldn't be able to access it, but you should also consider physical safety. If it's locked up at home, what happens if your house catches fire, or floods, or if an earthquake destroys it?

As an example, the paper keys for my own Keybase accounts are ...

- Written down on paper and stored in a fire safe at home. The paper itself has nothing on it but a collection of random words, so if somebody manages to break into the safe, they won't immediately know what the words are for - all they'll see is a collection of random words.

- In a text file, stored on an encrypted USB stick, also stored in the fire safe at home.

- On another encrypted USB stick, physically stored with a family member in a different part of the world.

This means if something happens to my house, up to and including permanent destruction, I can get the backup copies of the paperkeys from this family member. It might take a few days, but I wouldn't be permanently locked out of my accounts.

Resetting Your Account

The Keybase web site offers a way to "reset" your account. They do warn about this being a drastic action, but I don't feel like they make it "scary" enough.

🛑 Resetting your account starts a new account with the same username.

If you do this, you will permanently lose accesss to the content stored in the old account.

Even if you later find one of the old devices, it won't be able to log into your Keybase account anymore.

I explained above that if you lose the encryption keys for every device on your account, you lose access to everything stored in the account. When you reset your account, you are deleting the account entirely, and starting a new account with the same username. Other than the username, there is no connection between the old account and the new one.

You will also lose your memberships in any groups you may be part of. This also means that if your account was the only "owner" of any teams, those teams will now have no owner at all - which means they cannot be fully managed (and if there are also no users with the "admin" role, they cannot be managed at all).

The only time you should ever reset your account is if you are 110% sure that you will NEVER be able to regain access to the devices on the old account. If there is even a remote chance of regaining access to any of your old devices, I recommend starting a new account with a different username.

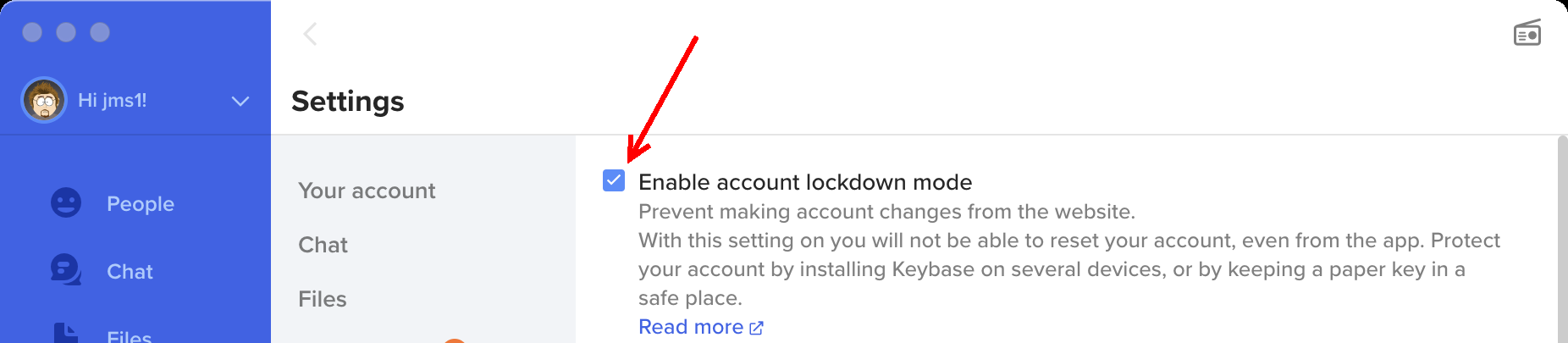

Lockdown Mode

It goes without saying that you should use a strong password for your Keybase account, and it should be a password that you aren't using for anything else.

BUT.

If somebody manages to get the password for your Keybase account, they could log into the web site as you and reset your account. Doing this wouldn't give them access to your stored information, but it would prevent YOU from being able to access it. (This is a form of "denial of service attack".)

🛑 There is no notification when an account is reset. If somebody manages to reset your account, you wouldn't know about it until you discover that you can't access your Keybase account anymore - and by then it would be too late to do anything about it.

This is not something that Keybase employees would be able to help you with. If your account is reset, whether you do it or an attacker does it, everything encrypted with the old account's device keys will be gone.

In the Keybase client, under Settings → Advanced, there is an "Enable account lockdown mode" setting. If this checkbox is turned on, Keybase will only allow the account to be reset or deleted from a logged-in Keybase device. If an attacker has your Keybase password and logs into the web site as you, the only things they could do would be to send invitations or change your notification settings.

Of course, if your account is in Lockdown Mode and you lose all of the devices, the account cannot be recovered or deleted. This means that you wouldn't be able to re-use the same username.

This makes it even more important that you not lose all of your devices, and that you have a paperkey.

⇒ This page has more details about Lockdown Mode.

ℹ️ All of my Keybase accounts have "Lockdown mode" turned on.

I'm okay with this, because I have paperkeys stored securely.

Checklist

-

Make sure your account has multiple devices attached to it.

-

Create a paperkey, write it down, and store it securely.

-

Check your devices every so often. (I check mine every few months.)

-

Check the list of devices. You can see this in the Keybase client, or by visiting

https://keybase.io/USERNAME/devices(substitute your own Keybase username forUSERNAME, obviously). Make sure that the devices you think are on the account, are actually there. Also make sure that your account doesn't have any devices which shouldn't be there. -

For phones, tablets, or computers that you may not use every day, make sure their software is up to date (especially the Keybase client itself), and that they are able to log into the account.

-

-

Enable "Lockdown Mode" on your account, but ONLY AFTER making sure you have multiple devices and a paperkey.

Lost Access

If you have lost all devices which are on your keybase account, and you don't have an active paperkey on the account (or if you've lost the paperkey) ... I'm sorry, but there is no way to recover any content stored in your account.

Resetting Your Account

There is a way to "reset" your account. This works by starting a new "signature chain" (or "sigchain") for the username. Doing this replaces the old sigchain, which means that any encryption keys currently attached to the account, will no longer be attached to the account.

ℹ️ Signature Chains

Each Keybase account has a chain of digital signatures. Each signature represents a statement by an existing device, authorizing a change to the list of encryption keys attached to the account. This happens when you add or remove devices, paperkeys, PGP keys, or identity proofs.

Signature chains are public. You can see any Keybase account's signature chain by visiting

https://keybase.io/USERNAME/sigchain. For example, this is the sigchain on my own Keybase account.

Resetting your account should only be considered as a final resort. If you do this, any content that was previously stored in your account will be permanently lost. This includes ...

-

Teams: You will be removed from any teams that you may be a member of.

-

If you were the only person with owner access to a team, resetting the account will leave the team without an owner. Other members who have admin access to the team will be able to add you back to the team as a member or as an admin, but there is no way for anybody to make you (or anybody else) an owner.

-

If you are the only person with owner or admin access to a team, resetting your account will leave that team without any way to manage members at all. Current members will remain members, but there won't be any way for anybody to add or remove members going forward.

-

Because of this, it's important to be sure that every team has multiple owners, even if the second owner is a "backup account" with a paperkey stored somewhere safe ... just like you should be doing with your primary account.

-

-

Files: You will no longer have access to any encrypted files, other than other users' public files.

-

Files under

/keybase/private/USERNAME/and/keybase/public/USERNAME/will be lost. -

Files under

/keybase/team/will still exist as part of that team, however your new account will not have access to them unless a team owner/admin adds you back to the team.

-

-

Git repositories: You will no longer have access to any encrypted git repos that your "old" account had.

-

Repos under

keybase://private/USERNAME/will be lost. -

Repos under

keybase://team/will still exist, but your "new" account won't have access to them unless a team owner/admin adds you back to the team.

Note that if you have an up-to-date clone of a Keybase repo on a computer somewhere, that could be used to create a new repo with the same commit history. However, computers need to be devices on your account in order to access Keybase git repos in the first place, so if you do have a cloned copy, chances are the computer involved is a device on your account, and you shouldn't need to reset the account.

-

-

Chat: You will no longer have access to any chat history.

-

For person-to-person (non-team) chats, the other people involved will still have access to the history, however the conversation will essentially be over. If you start a new conversation with them (or they try to chat with you) after you reset your account, it will be a new conversation.

-

For team chats, if your "new" account is added to the team again, you would regain access to the existing history.

-

If you reset your account and later find one of your devices or paperkeys, it will no longer be able to log into your account.

Essentially, the only time you should consider resetting your account is if you are 100% sure that you will never regain access to a device or paperkey which has access to the account. If there is any chance of finding one of these, you're better of creating a new Keybase account with a different username.

If your account is in Lockdown mode, the account cannot be reset except from an active device. And if you have an active device, you shouldn't need to reset the account in the first place.

I do this for my own Keybase accounts, but I also have paperkeys for each account which are physically written down and stored in safe places.

How to Reset Your Account

After reading everything above, if you're 100% sure you want to reset your account ...

- Visit keybase.io

- Use the "Login" link at the top right to log into your account. You will need the username and password.

- Near the top right of your profile will be a "gear" button.

In the Meantime

After reading everything above, it should be obvious that you need more than just one device on your account, so you will be able to regain access to your account in case you lose all of your devices.

Assuming you still have access to your Keybase account ...

- Read through the Before You Lose Access page.

- If possible, make sure there are multiple devices on your account.

- If you don't have more than one device, or if you want to be sure you have a way to regain access in case all of your devices are lost, make sure you have a paper key on your account.

Keybase Sites

Keybase Sites is a service built into Keybase which hosts web sites from KBFS directories. It can be used to host sites containing only static files - there is no provision for running any kind of server-side scripting.

I'm using it to host the jms1.info site you're reading right now.

Original write-up

https://jms1.pub/kbsites/ is the first set of information I wrote about Keybase Sites, and includes links to five different hostnames which illustrate how to host sites using five different kinds of sources:

/keybase/public/xxx//keybase/private/user#kbpbot/(private, withkbpbothaving read-only access)/keybase/team/xxx/(with thekbpbotuser as a team member with "reader" access)- git repo

keybase://team/xxx/repo, inmasterbranch (with thekbpbotuser as a team member with "reader" access) - git repo

keybase://team/xxx/repo, in some other branch (with thekbpbotuser as a team member with "reader" access)

jms1.info

Details about how I set up Keybase Sites for the jms1.info site. The site is hosted from a team directory with kbpbot as a team member with "reader" access.

Keybase Team

Create a Keybase team with the kbpbot user as a member with reader permissions. This can be either a top-level team, or a sub-team. (I'm using a sub-team.)

keybase team create jms1team.sites

keybase team add-member -s -u kbpbot -r reader jms1team.sites

Create a KBFS directory to hold the site's files. In my case, I'm using the same team to host multiple sites, so each site has its own directory within the team.

mkdir /keybase/team/jms1team.sites/jms1.info

Create a dummy index page so you can test that the site is working.

cat > /keybase/team/jms1team.sites/jms1.info/index.html <<EOF

<html>

<head>

<title>jms1.info</title>

</head>

<body>

<p>test 1 2 3</p>

</body>

</html>

EOF

DNS Records

I use djbdns to serve my DNS data. Below are the actual tinydns data lines from my DNS data, along with what I think are the equivalent records in BIND format.

-

For

jms1.info...+jms1.info:18.214.166.21:3600 '_keybase_pages.jms1.info:kbp=/keybase/team/jms1team.sites/jms1.info:3600jms1.info. 3600 IN A 18.214.166.21 _keybase_pages.jms1.info. 3600 IN TXT "kbp=/keybase/team/jms1team.sites/jms1.info"-

The record for

jms1.info.itself must be an A record, since the rules for CNAME records explicitly say that a resource record name which is a CNAME record, cannot also exist as any other kind of record. Since this record is the "root" of a domain, it must have an SOA record and one or more NS records, therefore it cannot also be a CNAME record.The IPv4 address,

18.214.166.21, is the IP thatkbp.keybaseapi.com.points to. This hasn't changed in a few years now, however if it does change in the future, I'll need to remember to update my own DNS records to match it. -

The TXT record for

_keybase_pages.jms1.info.is what tells Keybase Sites where to find the site's content. Because it points to a team folder, thekbpbotuser needs to have at least "reader" permissions in that team.

-

-

For

www.jms1.info(which does exist as a separate site, containing a meta-redirect tohttps://jms1.info/without thewww.in the hostname) ...Cwww.jms1.info:kbp.keybaseapi.com:3600 '_keybase_pages.www.jms1.info:kbp=/keybase/team/jms1team.sites/www.jms1.info:3600www.jms1.info. 3600 IN CNAME kbp.keybaseapi.com _keybase_pages.www.jms1.info. 3600 IN TXT "kbp=/keybase/team/jms1team.sites/www.jms1.info"-

The record for

www.jms1.info.is a CNAME record, pointing tokbp.keybaseapi.com. This makes thewww.jms1.infoname resolve to the same IP address(es) thatkbp.keybaseapi.compoints to, without having to worry about manually updating them ifkbp.keybaseapi.comever changes. -

The TXT record for

_keybase_pages.jms1.info.is what tells Keybase Sites where to find the site's content. Because it points to a team folder, thekbpbotuser needs to have at least "reader" permissions in that team.

-

Linux

I built my first Linux machine in 1992-11. Since then I've been using Linux on a regular basis, including almost every day since 1995-09 when I started working at my first ISP.

The pages in this section have to do with Linux, mostly system and network administration stuff.

CentOS 7 - Fix yum repos

2024-07-06

I have a fair number of scripts which run inside of containers. I do this for several reasons, including:

-

I can run things which require Linux, on my macOS workstations, using colima.

-

I can run scripts or programs which have complicated dependencies, without having to mess with installing those dependencies on every machine where I want to run the program. Everything is already installed within the container.

Many of these use the centos:7.9.2009 container (aka centos:7), because at the time I wrote them, I was using CentOS 7 on a day-to-day basis at work, both at work and personally. One of the reasons I do this at work is to build RPM packages for CentOS and RHEL systems, on my macOS machines.

CentOS 7 officially went end-of-life on 2024-06-30. At work they're paying for an extended-lifetime support contract with Red Hat, who provides us with access to a set of yum repos whose packages receive security and bug-fix updates for RHEL 7. These updated packages can only be used for RHEL 7 machines (not CentOS 7), and they can only be used for work-related machines for which the company pays a license. (It's not my money.)

We've spent the last few months replacing our CentOS 7 machines with RHEL 7 because of this. (And because somebody "higher up" heard a Red Hat employee say that convert2rhel leaves CentOS artifacts on the converted system, took that to mean it doesn't work, and ordered us not to use it, so we had to build all new VMs and migrate their programs and data by hand ... but that's a different discussion.)

vault.centos.org

CentOS has a server called vault.centos.org which contains copies of the CentOS yum repositories for retired CentOS versions, going back to CentOS 2.1.